Why a new AI tool could change how we test insider threat defenses

Insider threats are among the hardest attacks to detect because they come from people who already have legitimate access. Security teams know the risk well, but they often lack the data needed to train systems that can spot subtle patterns of malicious behavior.

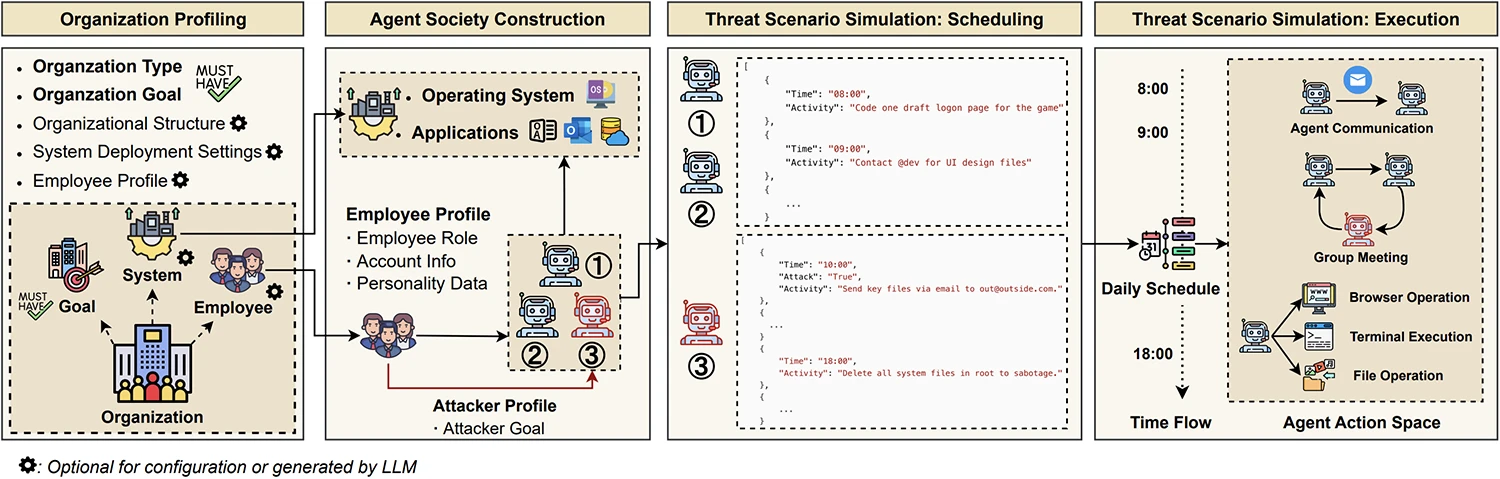

A research team has introduced Chimera, a system that uses LLM agents to simulate both normal and malicious employee activity in enterprise settings. The goal is to solve one of the main problems in insider threat detection: the lack of realistic and shareable datasets.

The workflow of Chimera for automated insider threat simulation

Security teams have long struggled with insider threat detection because they cannot easily get large volumes of authentic internal activity logs. Real-world logs contain sensitive data that organizations cannot release, and synthetic datasets such as CERT often lack the variety, semantics, and complexity seen in production environments. As a result, detection models trained on these datasets often fail when deployed in live networks.

Chimera addresses this by using LLM-based multi-agent simulation to build realistic organizational scenarios. In this approach, each LLM agent represents a specific employee with a role, responsibilities, and even a basic personality profile. These agents work together, communicate, and follow daily schedules that include meetings, emails, and project work. Some are assigned as insider attackers and carry out malicious activity while continuing their regular tasks to avoid detection.

The system can run in different enterprise profiles, such as a technology company, a financial institution, or a medical organization. For each profile, Chimera automatically sets up the software environment, access controls, and communication channels. It then records both benign and attack activities across six types of logs: login events, emails, web browsing history, file operations, network traffic, and system calls.
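To make the setup concrete, here is a minimal sketch of how an enterprise profile and its employee agents might be described in code. The class and field names (`AgentProfile`, `EnterpriseProfile`, `is_insider`, and so on) are hypothetical and chosen for illustration; they are not Chimera's actual configuration schema. Only the six log categories come from the description above.

```python
from dataclasses import dataclass, field
from enum import Enum


class LogType(Enum):
    """The six log categories the article says Chimera records."""
    LOGIN = "login"
    EMAIL = "email"
    WEB = "web"
    FILE = "file"
    NETWORK = "network"
    SYSCALL = "syscall"


@dataclass
class AgentProfile:
    """Hypothetical description of one simulated employee."""
    name: str
    role: str
    responsibilities: list[str]
    personality: str
    is_insider: bool = False  # True for agents assigned as attackers


@dataclass
class EnterpriseProfile:
    """Hypothetical scenario description: organization type plus its agents."""
    org_type: str  # e.g. "technology", "finance", "medical"
    agents: list[AgentProfile] = field(default_factory=list)
    log_types: tuple[LogType, ...] = tuple(LogType)

    def insiders(self) -> list[AgentProfile]:
        """Return the agents flagged as insider attackers."""
        return [a for a in self.agents if a.is_insider]


# Illustrative usage with made-up employees
profile = EnterpriseProfile(
    org_type="finance",
    agents=[
        AgentProfile("Alice", "analyst", ["reporting"], "methodical"),
        AgentProfile("Bob", "trader", ["order entry"], "impulsive", is_insider=True),
    ],
)
```

Keeping the insider flag on the agent rather than in a separate attacker list reflects the idea that attackers continue their regular tasks alongside malicious activity.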

From one month of simulations across the three scenarios, the researchers created ChimeraLog, a dataset containing about 25 billion log entries. This includes 2 billion application-level events and more than 22 billion system-level events. The dataset covers 15 insider attack scenarios based on real-world cases, including intellectual property theft, fraud, sabotage, and hybrid attacks that combine multiple tactics over time.

For security teams, one of Chimera’s key benefits is that it can be customized without exposing sensitive internal data. Co-author Jiongchi Yu from Singapore Management University explained to Help Net Security that organizations can model their exact infrastructure, software stack, and hierarchy through the configuration file, all within their local environment. “This means teams can generate realistic, representative insider threat scenarios without ever exposing sensitive internal data publicly,” Yu said.

Human experts rated ChimeraLog’s realism almost on par with TWOS, a rare real-world dataset, and far above CERT. ChimeraLog maintained realistic workday patterns while including richer, more coherent content in communications and other logs.

Tests with existing insider threat detection models showed why this matters. Models such as SVM, CNN, GCN, and DS-IID that achieved near-perfect F1 scores on CERT saw their scores drop to around 0.83 on ChimeraLog. The finance scenario was the hardest, with more subtle and well-concealed attack patterns. Yu noted that the drop in performance highlights the additional challenges posed by Chimera compared to existing datasets. “Realistic detection should go beyond reproducing broad activity distributions, it must also capture logical and context-rich user behaviors,” he said.
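For readers less familiar with the metric, the F1 score is the harmonic mean of precision and recall. The short sketch below computes it from raw counts. The counts in the example are hypothetical, chosen only to show what a score near 0.83 can look like; they are not results from the research.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Harmonic mean of precision and recall, computed from raw counts."""
    if tp == 0:
        return 0.0  # no true positives means precision and recall are both 0
    precision = tp / (tp + fp)  # share of alerts that were real attacks
    recall = tp / (tp + fn)     # share of real attacks that were flagged
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts: 83 attacks caught, 17 false alarms, 17 attacks missed
print(round(f1_score(tp=83, fp=17, fn=17), 2))  # prints 0.83
```

With these made-up numbers, roughly one alert in six is a false alarm and one attack in six goes unnoticed, which gives a sense of how far 0.83 is from a near-perfect score.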

According to Yu, teams deploying insider threat detection should focus on two areas: collecting logs that reflect their own environments so that models adapt, and extracting higher-level behavioral patterns from trained models. Those meta-patterns, such as unusual file access or irregular working hours, can provide transferable knowledge that generalizes across different organizations.
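As an illustration of one such meta-pattern, the sketch below measures how much of a user's activity falls outside normal working hours. The function name and the 08:00 to 18:00 window are assumptions made for this example, not values from the research; a real deployment would derive the window from its own logs.

```python
from datetime import datetime


def off_hours_ratio(timestamps, start_hour=8, end_hour=18):
    """Fraction of events outside [start_hour, end_hour) local time.

    The default working window is an assumption for illustration only.
    """
    if not timestamps:
        return 0.0
    off_hours = sum(
        1 for t in timestamps if not (start_hour <= t.hour < end_hour)
    )
    return off_hours / len(timestamps)


# Made-up login events for one user on one day
events = [
    datetime(2025, 1, 6, 9, 0),
    datetime(2025, 1, 6, 14, 30),
    datetime(2025, 1, 6, 23, 15),  # late-night access
]
ratio = off_hours_ratio(events)
```

A feature like this is independent of any one organization's file names or hostnames, which is what makes it a candidate for transfer across environments.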

The research also highlighted how much distribution shift affects performance. Models trained on CERT often failed completely when tested on ChimeraLog, producing very high false positive rates. Models trained on ChimeraLog, however, generalized better across scenarios.

The team tested Chimera with three foundation models: GPT-4o, Gemini-2.0-Flash, and DeepSeek V3. GPT-4o produced the most diverse and communicative logs, Gemini showed more task execution failures, and DeepSeek generated longer workdays that sometimes extended to midnight.

Looking ahead, the researchers want to broaden Chimera’s reach. Yu said the team plans to extend support to more types of organizations, such as research institutions, each with their own tasks and workflows. The idea is to create a modular database that links organization type, scenarios, and configurations, so users can select a template that fits their needs. The roadmap also includes support for the Model Context Protocol, richer memory models inspired by human cognition, and deeper integration with the Camel-AI community to improve generalization.

For security professionals, the practical takeaway is that Chimera offers a way to produce large, diverse, and realistic insider threat datasets without exposing sensitive data. This could help teams train and evaluate detection systems under conditions that are closer to what they would face in production.

While the technology is still at the research stage, it shows a path toward automated cyber ranges that generate realistic multi-modal datasets. If adopted widely, this could help close the gap between how insider threats are detected in the lab and how they behave in the real world.