Search engine hack innovation

Attackers are increasingly leveraging the power of search engines, like Google, to successfully carry out automated cyber attacks against vulnerable websites, according to Imperva.

In the technique, dubbed “Google Hacking,” hackers armed with a browser and specially crafted search queries (“dorks”) use botnets to generate more than 80,000 queries a day, identify potential attack targets and build an accurate picture of the potentially exposed resources on each server.

By automating the query and result parsing, the attacker can carry out a large number of search queries, examine the returned results and get a filtered list of potentially exploitable sites in a very short time and with minimal effort.

Because searches are conducted using botnets, and not the hacker’s IP address, the attacker’s identity remains concealed.

“Hackers have become experts at using Google to create a map of hackable targets on the Web. This cyber reconnaissance allows hackers to be more productive when it comes to targeting attacks which may lead to contaminated web sites, data theft, data modification, or even a compromise of company servers,” explained Imperva’s CTO, Amichai Shulman. “These attacks highlight that search engine providers need to do more to prevent attackers from taking advantage of their platforms.”

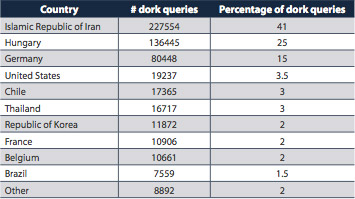

Countries of hosts issuing dork queries

Botnet-based search engine mining

To block automated search campaigns, today’s search engines deploy detection mechanisms based on the IP address of the originating request. Imperva’s investigation shows that hackers easily overcome these mechanisms by distributing their queries across many compromised machines, i.e. a botnet.
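The weakness of per-IP detection can be illustrated with a minimal sketch. It assumes a simple sliding-window limit of 30 queries per minute per IP address. The class name, the thresholds, the documentation-range IP addresses and the botnet size of 40 hosts are all hypothetical, and nothing here reflects how any real search engine works.

```python
from collections import defaultdict, deque


class PerIpRateLimiter:
    """Rejects a query once its source IP has used up its quota for the window."""

    def __init__(self, max_queries: int, window_seconds: float):
        self.max_queries = max_queries
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # ip -> timestamps of accepted queries

    def allow(self, ip: str, now: float) -> bool:
        timestamps = self._history[ip]
        # Forget accepted queries that have fallen out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_queries:
            return False
        timestamps.append(now)
        return True


def count_blocked(source_ips, total_queries, limiter):
    """Replay one query per second, rotating through source_ips; return rejections."""
    blocked = 0
    for second in range(total_queries):
        ip = source_ips[second % len(source_ips)]
        if not limiter.allow(ip, now=float(second)):
            blocked += 1
    return blocked


# Hypothetical traffic: the same 600 queries from one host versus 40 hosts.
single_host = ["198.51.100.1"]
botnet = [f"203.0.113.{n}" for n in range(1, 41)]

blocked_single = count_blocked(single_host, 600, PerIpRateLimiter(30, 60.0))
blocked_botnet = count_blocked(botnet, 600, PerIpRateLimiter(30, 60.0))
print(blocked_single, blocked_botnet)
```

Under these assumptions the single host has half of its queries rejected, while the same query volume spread across the 40 hosts never trips the limit, because each host stays far below the per-IP threshold.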

During May and June, Imperva’s Application Defense Center (ADC) observed a specific botnet attack on a popular search engine. For each unique search query, the botnet examined dozens and even hundreds of returned results using paging parameters in the query.

The volume of attack traffic was huge: nearly 550,000 queries (up to 81,000 daily queries, and 22,000 daily queries on average) were issued during the observation period. The attacker was able to take advantage of the bandwidth available to the dozens of controlled hosts in the botnet to seek and examine vulnerable applications.

Recommendations for search engines

Search engine providers should start looking for unusual, suspicious queries, such as those known to be part of public dork databases, or queries that look for known sensitive files (/etc files or database data files).
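A minimal sketch of this kind of query screening might look like the following. The pattern lists are small hypothetical stand-ins for a public dork database and a sensitive-file list, and `classify_query` is an illustrative name rather than anything described in the report.

```python
import re

# Hypothetical stand-in for a public dork database; real lists are far larger.
KNOWN_DORK_PATTERNS = [
    re.compile(r"\binurl:\S*\.php\?\S*id=", re.IGNORECASE),
    re.compile(r"\bintitle:\s*\"?index of\b", re.IGNORECASE),
    re.compile(r"\bfiletype:(sql|bak|env)\b", re.IGNORECASE),
]

# Hypothetical examples of sensitive targets: /etc files and database data files.
SENSITIVE_FILE_PATTERNS = [
    re.compile(r"/etc/(passwd|shadow)\b", re.IGNORECASE),
    re.compile(r"\.(mdb|sql|dbf)\b", re.IGNORECASE),
]


def classify_query(query: str) -> list[str]:
    """Return the reasons a search query looks suspicious (empty list if none)."""
    reasons = []
    if any(pattern.search(query) for pattern in KNOWN_DORK_PATTERNS):
        reasons.append("known-dork")
    if any(pattern.search(query) for pattern in SENSITIVE_FILE_PATTERNS):
        reasons.append("sensitive-file")
    return reasons


for sample in ['intitle:"index of" /etc/passwd', "best pizza recipes"]:
    print(sample, "->", classify_query(sample))
```

Pattern matching of this sort would only be a first filter. A production system would presumably combine it with query volume and paging behavior before flagging a host.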

A list of IPs suspected of being part of a botnet, along with the pattern of queries the botnet issues, can be extracted from the suspicious traffic flagged by this analysis. Using these blacklists, search engines can then:

- Apply strict anti-automation policies (e.g. using CAPTCHA) to IP addresses that are blacklisted. Google has been known to use CAPTCHA in recent years when a client host exhibits suspicious behavior. However, it appears that this is motivated at least partly by a desire to fight Search Engine Optimization and preserve the engine’s computational resources, and less by security concerns. Smaller search engines rarely resort to defenses more sophisticated than applying timeouts between queries from the same IP, which are easily circumvented by automated botnets.

- Identify additional hosts that exhibit the same suspicious behavior pattern and add them to the IP blacklist.
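The two steps above can be sketched as follows, assuming the flagged traffic is available as simple `(ip, query)` pairs. The function names, the `min_matches` threshold and the sample data are hypothetical and only illustrate the idea.

```python
from collections import defaultdict


def build_blacklist(flagged_events):
    """Collect suspected botnet IPs and the set of queries they issued."""
    blacklisted_ips = set()
    botnet_queries = set()
    for ip, query in flagged_events:
        blacklisted_ips.add(ip)
        botnet_queries.add(query.strip().lower())
    return blacklisted_ips, botnet_queries


def expand_blacklist(blacklisted_ips, botnet_queries, traffic_log, min_matches=3):
    """Add hosts that issued at least min_matches distinct queries from the botnet's set."""
    matches = defaultdict(set)
    for ip, query in traffic_log:
        normalized = query.strip().lower()
        if normalized in botnet_queries:
            matches[ip].add(normalized)
    newly_found = {ip for ip, seen in matches.items() if len(seen) >= min_matches}
    return blacklisted_ips | newly_found


def requires_captcha(ip, blacklist):
    """Strict anti-automation policy: challenge every blacklisted IP."""
    return ip in blacklist


# Hypothetical flagged traffic and a later traffic log.
flagged = [("203.0.113.5", "dork a"), ("203.0.113.5", "dork b"), ("203.0.113.6", "dork c")]
log = [
    ("203.0.113.9", "Dork A"), ("203.0.113.9", "dork b"), ("203.0.113.9", "dork c"),
    ("198.51.100.7", "dork a"), ("198.51.100.7", "weather tomorrow"),
]

ips, queries = build_blacklist(flagged)
blacklist = expand_blacklist(ips, queries, log)
print(sorted(blacklist))
```

Requiring several distinct matching queries before blacklisting a host is one way to limit false positives, since a legitimate user could issue a single query that happens to appear in the botnet's set.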

Search engines can also use the IP blacklist to warn the registered owners of those IP addresses that their machines may have been compromised by attackers. Such a proactive approach could help make the Internet safer, instead of just settling for limiting the damage caused by compromised hosts.

The complete report is available here.